LLM Developers Face Cost, Energy, and Other Challenges Bringing Products to Market, Insiders Say

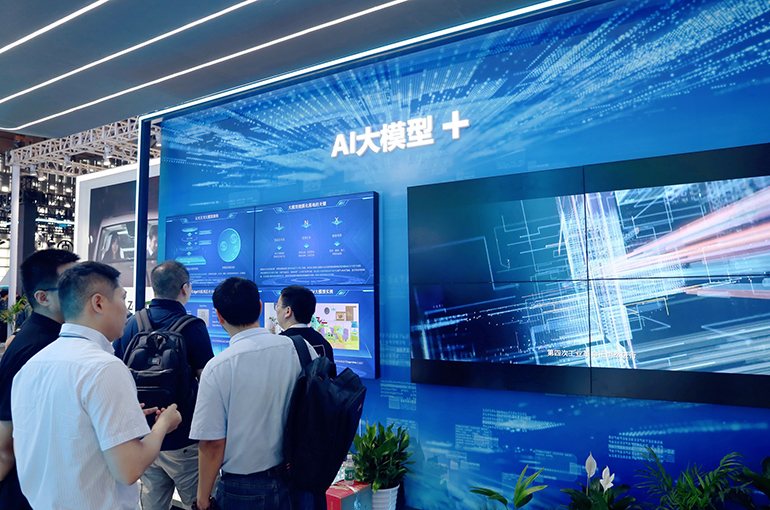

(Yicai Global) July 27 -- Developers of large language models come up against a number of challenges when commercializing and promoting the benefits of their products, including the cost of computing power, data security, and energy use, according to people in the industry.

Vast computing power is needed to train and use LLMs, said Chen Weiliang, founder of graphics processing unit designer MetaX Integrated Circuits. Failing to lower the associated cost will therefore restrict how widely LLMs are used, added Chen, who is also chairman and chief executive of the Shanghai-based company.

Training the GPT-3 model cost about USD1.4 million, while for some bigger LLMs, the expense is between USD2 million and USD12 million, according to estimates by Guosheng Securities. OpenAI's ChatGPT, the best-known GPT model, was used by an average of about 13 million unique users a day in January, requiring the support of more than 30,000 Nvidia A100 GPUs, with an initial outlay of USD800 million and a daily electricity bill of about USD50,000.

But computing power costs will fall further as the utilization rate of GPUs increases, according to Chen.

Many firms in artificial intelligence need to convert existing academic resources into models ready for actual business application, said Peng Yao, who set up Shanghai Supermind Intelligent Technology, an AI-based video analysis startup. Besides the expense of computing power, another challenge is how to build an advantage in industry data, including how to label professionally processed data, he said.

Not many people can annotate professional data, and most cross-industry partnerships aim to solve data labeling issues. Huawei Technologies came up with the AI medical model Uni-Talk in collaboration with China Unicom's Shanghai branch, Huashan Hospital of Fudan University, and other partners.

The so-called small models that focus on specific industries and have fewer training parameters are likely a more economical solution, according to some in the industry. But these models also face data annotation issues in their application, and the lack of related talent still needs to be resolved, they note.

LLMs tend to have long latency during inference, along with high energy consumption and carbon emissions, others pointed out, adding that all of these limit their end use.

Editors: Shi Yi, Martin Kadiev