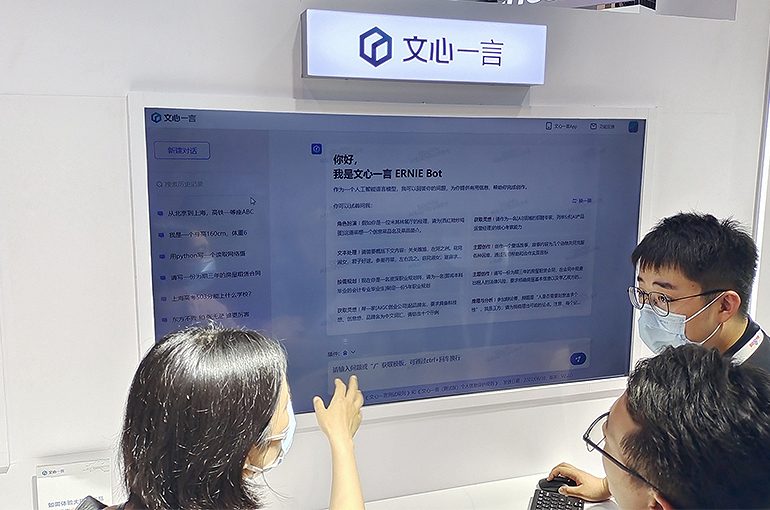

Baidu Follows Alibaba’s Steep LLM Price Cut With Two Free Ernie Bot Models

(Yicai) May 22 -- Baidu has made two of its Ernie Bot large language models free of charge, joining a price war in China’s artificial intelligence market that intensified after Alibaba's cloud unit revealed price cuts of as much as 97 percent on its generative AI models.

Ernie Speed and Ernie Lite are now free of charge for all business users, Beijing-based Baidu said in a statement yesterday.

Just a few hours earlier, Alibaba Cloud Intelligence Group slashed the application programming interface input price of Qwen-Long, part of its Tongyi Qianwen LLM series, to 0.05 Chinese cents per 1,000 tokens, which is equal to CNY1 for 2 million tokens. Qwen-Long now costs about one-400th the price of OpenAI’s GPT-4.

“If one internet company cuts prices, the others have to follow or risk falling behind,” Zhang Junlin, head of research and development for new technologies at Sina Weibo, told Yicai.

The wave of price cuts began earlier this month. High-Flyer, one of China’s biggest quant hedge funds, said on May 6 that the input and output prices of its LLM would be CNY1 (14 US cents) and CNY2 per 1 million tokens, respectively, about one-100th of the prices for GPT-4.

Then on May 13, Zhipu AI Open Platform introduced a new pricing system, lowering the price for its entry-level AI models by 80 percent to CNY1 per 1 million tokens.

Two days later, Volcano Engine, the cloud computing services unit of TikTok owner ByteDance, officially released the Doubao AI model. Its main enterprise model costs 0.08 Chinese cents (0.01 US cents) per 1,000 tokens, about 99 percent below the industry average.
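The prices quoted above use two different units: Chinese cents per 1,000 tokens and CNY per 1 million tokens. The short Python sketch below puts them on a common CNY-per-million-token basis for comparison. The function name and the labels are illustrative assumptions, not any vendor's terminology, and the figures are only those quoted in this article.

```python
from fractions import Fraction

# 1 CNY = 100 Chinese cents (fen)
FEN_PER_YUAN = 100


def fen_per_1k_to_cny_per_million(fen_per_1k: str) -> Fraction:
    """Convert a price in Chinese cents per 1,000 tokens to CNY per 1 million tokens.

    Hypothetical helper for illustration; Fraction avoids floating-point rounding.
    """
    cny_per_1k = Fraction(fen_per_1k) / FEN_PER_YUAN
    return cny_per_1k * 1000  # 1 million tokens = 1,000 blocks of 1,000 tokens


# Prices as quoted in the article, normalized to CNY per 1 million tokens.
quoted = {
    "Qwen-Long (input)": fen_per_1k_to_cny_per_million("0.05"),
    "Doubao (main enterprise model)": fen_per_1k_to_cny_per_million("0.08"),
    "High-Flyer (input)": Fraction(1),
    "Zhipu AI (entry-level)": Fraction(1),
}

# Print the cheapest price first.
for name, price in sorted(quoted.items(), key=lambda kv: kv[1]):
    print(f"{name}: CNY {float(price):.2f} per 1 million tokens")
```

On these figures, Qwen-Long's input price works out to CNY0.50 per 1 million tokens, consistent with CNY1 for 2 million tokens, and Doubao's main enterprise model to CNY0.80.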

Following Alibaba Cloud’s price cut yesterday, a Volcano Engine representative told Yicai that it welcomed the move, saying it will help companies explore AI transformation at lower cost and hasten the implementation of LLM application scenarios.

Lower prices are a way for LLM providers to get more customers to use their products, said Zhang Yi, a cloud computing analyst at Canalys. Many of those that have cut prices also supply cloud computing services, which means they aim to boost demand for those services too, Zhang added.

LLMs will redefine cloud computing-based tools and greatly improve their efficiency, offering businesses greater cost-effectiveness and more convenient cloud products.

Zhang noted that while the price cuts may attract some customers in the short term, in the long run, LLMs still face the challenge of generating more practical value to entice a broader user base.

Editor: Futura Costaglione